Computer vision and robotics applications, ranging from augmented reality to robot autonomy in large-scale environments, require spatio-temporal memory frameworks that capture both semantic detail and the geometric structure needed for accurate language grounding. Existing methods face a tradeoff: producing rich open-vocabulary descriptions comes at the expense of real-time performance whenever these descriptions must be grounded in 3D.

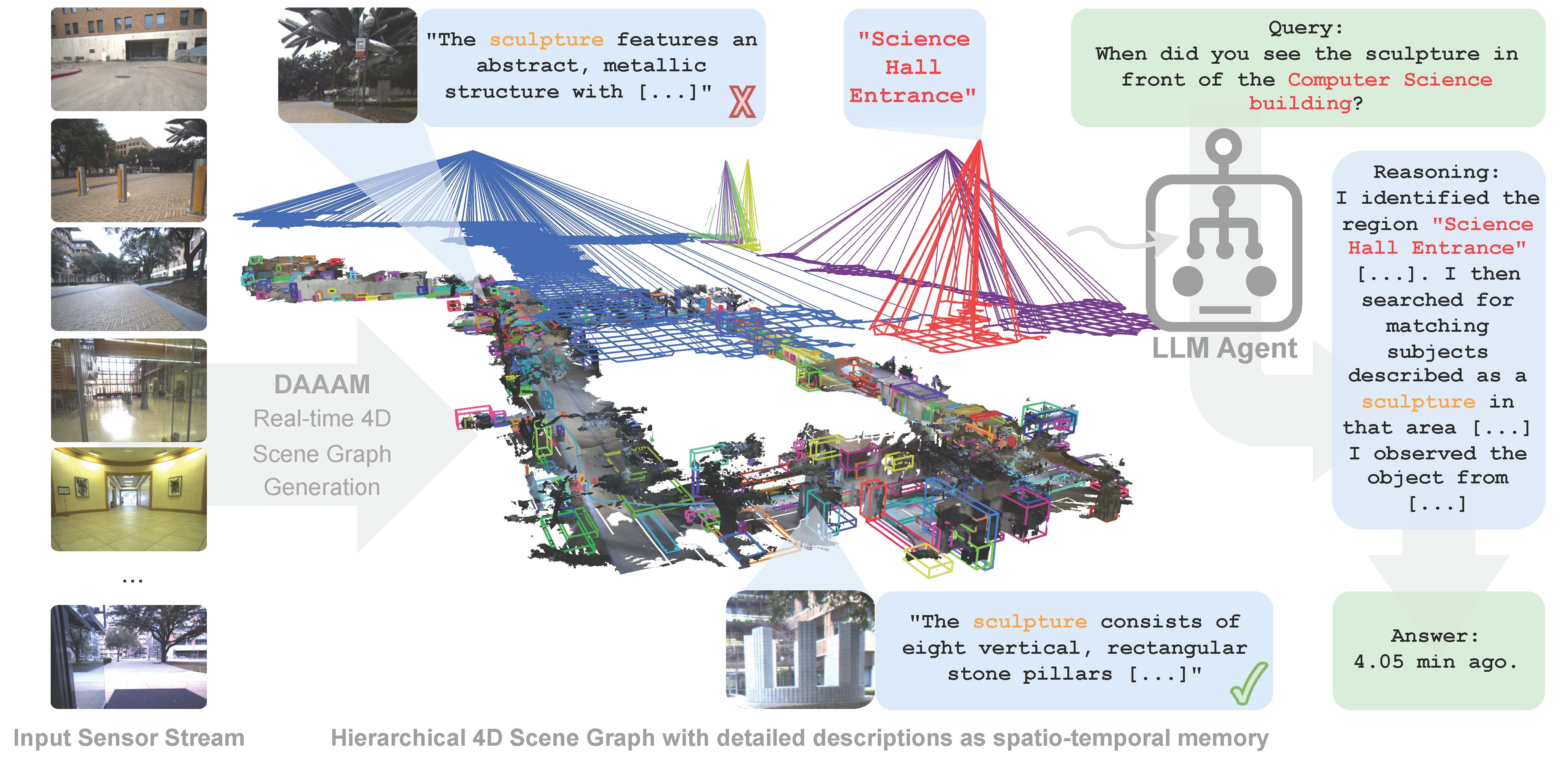

To address these challenges, we propose Describe Anything, Anywhere, at Any Moment (DAAAM), a novel spatio-temporal memory framework for large-scale, real-time 4D scene understanding. DAAAM introduces an optimization-based frontend that infers detailed semantic descriptions from localized captioning models, such as the Describe Anything Model (DAM), and uses batch processing to speed up inference by an order of magnitude for online operation. It uses this semantic understanding to build a hierarchical 4D scene graph (SG), which serves as a globally consistent spatial and temporal memory representation. DAAAM thus constructs 4D SGs with detailed, geometrically grounded descriptions while maintaining real-time performance. We further show that DAAAM's 4D SG interfaces well with a tool-calling agent for inference and reasoning.
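To illustrate why batching helps, the sketch below groups (frame, segment) captioning requests into fixed-size batches so the captioner is invoked once per batch rather than once per object. It is a minimal bookkeeping sketch, not DAAAM's code: `CaptionRequest`, `describe_all`, and the `describe_batch` callable are hypothetical names, and a stand-in function replaces the real DAM model, whose API is not assumed here.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence


@dataclass(frozen=True)
class CaptionRequest:
    """One (frame, segment) pair to describe; the fields are hypothetical."""
    object_id: int
    frame_id: int
    mask_id: int


def batched(items: Sequence[CaptionRequest], batch_size: int) -> List[List[CaptionRequest]]:
    """Split requests into consecutive chunks of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    return [list(items[i:i + batch_size]) for i in range(0, len(items), batch_size)]


def describe_all(
    requests: Sequence[CaptionRequest],
    describe_batch: Callable[[List[CaptionRequest]], List[str]],
    batch_size: int = 8,
) -> Dict[int, List[str]]:
    """Call the captioner once per batch and group the captions by object."""
    descriptions: Dict[int, List[str]] = {}
    for batch in batched(requests, batch_size):
        captions = describe_batch(batch)  # one model call covers the whole batch
        if len(captions) != len(batch):
            raise RuntimeError("captioner must return one caption per request")
        for req, caption in zip(batch, captions):
            descriptions.setdefault(req.object_id, []).append(caption)
    return descriptions


# Usage with a stand-in captioner that records how many requests each call receives.
calls: List[int] = []

def fake_describe_batch(batch: List[CaptionRequest]) -> List[str]:
    calls.append(len(batch))
    return [f"object {r.object_id} seen in frame {r.frame_id}" for r in batch]

requests = [CaptionRequest(object_id=i % 3, frame_id=i, mask_id=i) for i in range(20)]
captions_by_object = describe_all(requests, fake_describe_batch, batch_size=8)
print(calls)  # [8, 8, 4]: 3 model calls instead of 20
```

The actual speedup of a batched model comes from the GPU processing stacked inputs in a single forward pass; this sketch only shows how requests could be grouped and how captions are routed back to their objects.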

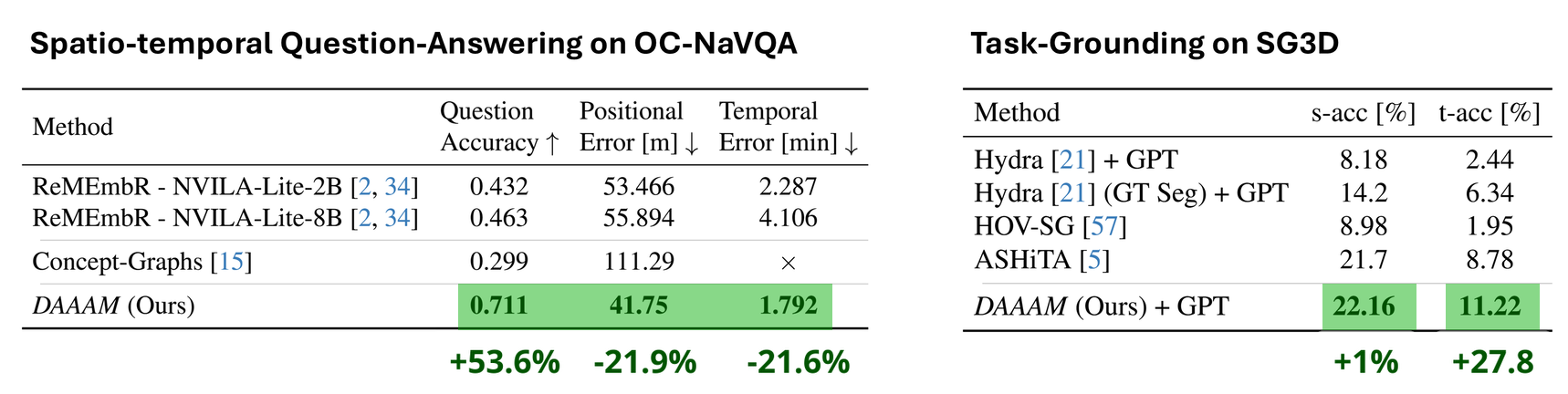

We thoroughly evaluate DAAAM on the complex task of spatio-temporal question answering using the NaVQA benchmark, and show its generalization capabilities on sequential task grounding using the SG3D benchmark. We further curate an extended benchmark, OC-NaVQA, for large-scale and long-duration evaluations. DAAAM achieves state-of-the-art results on both tasks, improving over the most competitive baselines by 53.6% in OC-NaVQA question accuracy, 21.9% in position error, and 21.6% in temporal error, and by 27.8% in SG3D task grounding accuracy. We release our data and code as open source.

Given an RGB-D video stream, DAAAM first segments the scene into fragments and tracks them over time. We perform metric-semantic mapping to build a 4D map of the environment. To semantically lift the resulting map, we aggregate the tracked observations and select frames using an optimization-based frame selection algorithm. The selected frames and segments are batch-processed by the Describe Anything Model (DAM) to generate detailed descriptions for each object. The generated descriptions are incorporated back into the map and a 4D scene graph is constructed and clustered into semantically informed regions.
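One way to read "optimization-based frame selection" is as a coverage problem: choose a small set of frames such that every tracked segment is visible in at least one selected frame, preferring high-quality views. The sketch below uses a greedy weighted set-cover heuristic for this; it is an assumed stand-in, not the formulation used in DAAAM. The input format (a per-frame dictionary of segment quality scores in [0, 1]) and the name `select_frames` are hypothetical.

```python
from typing import Dict, List, Optional, Set, Tuple

# observations[frame_id] = {segment_id: view quality in [0, 1]} (hypothetical format)
Observations = Dict[int, Dict[int, float]]


def select_frames(observations: Observations, max_frames: int) -> Tuple[List[int], Dict[int, int]]:
    """Greedy weighted set cover over tracked segments.

    Repeatedly picks the frame whose not-yet-covered segments have the highest
    total quality, then assigns each covered segment to its best selected view.
    """
    all_segments: Set[int] = {s for segs in observations.values() for s in segs}
    uncovered = set(all_segments)
    selected: List[int] = []
    while uncovered and len(selected) < max_frames:
        best_frame: Optional[int] = None
        best_gain = 0.0
        for frame_id, segs in observations.items():
            if frame_id in selected:
                continue
            gain = sum(q for s, q in segs.items() if s in uncovered)
            if gain > best_gain:
                best_frame, best_gain = frame_id, gain
        if best_frame is None:  # no remaining frame adds positive coverage
            break
        selected.append(best_frame)
        uncovered -= set(observations[best_frame])
    # For each covered segment, keep the selected frame with the highest quality.
    assignment: Dict[int, int] = {}
    for s in sorted(all_segments - uncovered):
        assignment[s] = max(
            (f for f in selected if s in observations[f]),
            key=lambda f: observations[f][s],
        )
    return selected, assignment


observations = {
    0: {1: 0.9, 2: 0.4},
    1: {2: 0.8, 3: 0.7},
    2: {3: 0.3},
    3: {1: 0.5, 2: 0.5, 3: 0.4},
}
selected, assignment = select_frames(observations, max_frames=4)
print(selected)    # [1, 0]
print(assignment)  # {1: 0, 2: 1, 3: 1}
```

Greedy set cover is a standard approximation for this kind of objective; the selected frames and their assigned segments are what would then be sent to the captioner in batches.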

DAAAM enables spatio-temporal question answering in complex, large-scale environments. By building a hierarchical 4D scene graph with detailed descriptions, DAAAM can accurately answer questions about object locations, temporal events, and spatial relationships.
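As an illustration of how a tool-calling agent might query such a memory, the sketch below models a two-level hierarchy (regions containing objects) in which each object stores its description, a 3D position, and the timestamps at which it was observed. The schema and the query methods (`find_objects`, `last_seen`, `objects_near`) are hypothetical and do not reflect DAAAM's actual scene graph format; an agent could expose methods like these as tools.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ObjectNode:
    object_id: int
    description: str
    position: Vec3
    timestamps: List[float] = field(default_factory=list)  # seconds when observed


@dataclass
class RegionNode:
    name: str
    objects: List[ObjectNode] = field(default_factory=list)


@dataclass
class SceneGraph4D:
    regions: List[RegionNode] = field(default_factory=list)

    def find_objects(self, keyword: str) -> List[Tuple[str, ObjectNode]]:
        """Return (region name, object) pairs whose description contains keyword."""
        kw = keyword.lower()
        return [(r.name, o) for r in self.regions for o in r.objects
                if kw in o.description.lower()]

    def last_seen(self, object_id: int) -> Optional[float]:
        """Return the latest observation time of an object, or None if unknown."""
        for r in self.regions:
            for o in r.objects:
                if o.object_id == object_id and o.timestamps:
                    return max(o.timestamps)
        return None

    def objects_near(self, point: Vec3, radius: float) -> List[ObjectNode]:
        """Return objects whose position lies within radius (meters) of point."""
        return [o for r in self.regions for o in r.objects
                if math.dist(o.position, point) <= radius]


sg = SceneGraph4D([
    RegionNode("kitchen", [
        ObjectNode(1, "a red mug on the counter", (1.0, 2.0, 0.9), [3.0, 41.5]),
        ObjectNode(2, "a silver kettle", (1.4, 2.1, 0.9), [3.2]),
    ]),
    RegionNode("office", [
        ObjectNode(3, "a blue mug next to a laptop", (8.0, -1.0, 0.7), [120.0]),
    ]),
])
print([(region, o.object_id) for region, o in sg.find_objects("mug")])  # [('kitchen', 1), ('office', 3)]
print(sg.last_seen(1))  # 41.5
print([o.object_id for o in sg.objects_near((1.0, 2.0, 0.9), radius=0.5)])  # [1, 2]
```

Keeping timestamps on object nodes is what lets temporal questions ("when did I last see the red mug?") be answered alongside spatial ones.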

DAAAM achieves state-of-the-art results on both spatio-temporal question answering (OC-NaVQA) and sequential task grounding (SG3D) benchmarks.

If you find this useful for your research, please consider citing our paper:

```bibtex
@article{Gorlo2025DAAAM,
  title={Describe Anything Anywhere At Any Moment},
  author={Nicolas Gorlo and Lukas Schmid and Luca Carlone},
  year={2025},
  eprint={2512.00565},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2512.00565},
}
```